Docker has changed how DevOps teams build, ship, and run software across the development lifecycle. It packages an application together with its environment into a self-contained container. This simplifies managing, distributing, and running applications, and it makes DevOps practices more efficient overall.

Bringing Docker into DevOps workflows lets organizations tie integration, testing, delivery, and deployment into one consistent pipeline. It also addresses two persistent problems: discrepancies between environments and heavy reliance on complex dependencies.

The Essence of Docker in DevOps

Delving into the Realm of Containerization

Containerization is a core concept in modern DevOps. It means packaging an application together with everything it needs to run (runtime, libraries, and configuration) so that it executes consistently across different computing environments. This addresses the familiar “it works on my machine” problem, because a containerized application behaves the same way wherever it is deployed. The main advantages are outlined below:

Advantages of Containerization:

- Consistency: A containerized application runs the same way on a developer’s laptop, a testing server, or in production. This removes a whole class of bugs that appear only in specific environments;

- Isolation: Containers are isolated from one another and from the host system. This prevents conflicts between applications and keeps the actions of one container from interfering with another;

- Portability: Containers can be moved between on-premises servers, cloud platforms, and developer workstations. This portability simplifies deployment and scaling;

- Resource Efficiency: Containers share the host operating system’s kernel, which makes them lightweight. Many containers can run on a single host without significant performance overhead;

- Version Control: Containerization lets you define your application’s environment as code, typically in a Dockerfile. You can therefore version-control your application’s infrastructure and reproduce its configuration and settings reliably.

Docker Components in DevOps

Now, let’s delve into the key components of Docker that make it a DevOps powerhouse:

Dockerfile

A Dockerfile is like the recipe for baking a container. It is a text file containing a series of instructions (such as FROM, COPY, and RUN) and their parameters, which together specify how to build a Docker image. Here is why it is essential:

Why Dockerfile Matters:

- Repeatability: Dockerfiles ensure that anyone can replicate the exact environment required for your application by simply following your instructions;

- Version Control: Dockerfiles are text-based, making them easy to version-control alongside your application’s code. This ensures that changes to the environment are tracked and managed effectively;

- Customization: Dockerfiles allow you to customize your container’s environment precisely to suit your application’s needs.
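
To make the recipe idea concrete, here is a minimal sketch that renders a Dockerfile from Python. The helper `render_dockerfile`, the base image `python:3.12-slim`, and the file names `requirements.txt` and `app.py` are illustrative assumptions rather than part of any particular project.

```python
import json


def render_dockerfile(base_image: str, workdir: str,
                      install_cmd: str, start_cmd: list[str]) -> str:
    """Return the text of a simple Dockerfile (hypothetical helper)."""
    lines = [
        f"FROM {base_image}",             # base image to build on
        f"WORKDIR {workdir}",             # working directory inside the image
        "COPY . .",                       # copy the build context into the image
        f"RUN {install_cmd}",             # install dependencies at build time
        f"CMD {json.dumps(start_cmd)}",   # default command, in exec (JSON array) form
    ]
    return "\n".join(lines) + "\n"


# All values below are assumptions chosen for illustration.
dockerfile = render_dockerfile(
    base_image="python:3.12-slim",
    workdir="/app",
    install_cmd="pip install -r requirements.txt",
    start_cmd=["python", "app.py"],
)
print(dockerfile)
```

Because the result is plain text, it can be committed next to the application code and reviewed like any other change.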

Docker Image Foundations

Docker images are the templates from which containers are created. Two properties make them especially useful:

Understanding the Importance of Docker Images:

- Immutable Infrastructure: Docker images are read-only. Once built, an image does not change, even while containers run from it, which supports the practice of immutable infrastructure;

- Reusable Design: Images can be shared, reused, and built upon as base images. This speeds up development and keeps environments uniform across the stages of the deployment pipeline.

Docker Containers: Revolutionizing Software Deployment

Docker containers are the running instances of Docker images. Here is why they are often described as revolutionary:

Unlocking the Core of Docker Containers:

- Strong Isolation: Containers run applications in isolation from the host system and from other containers. This greatly reduces the frustrating problem of incompatible dependencies;

- Independent Lifecycle: Each container can be started, stopped, and destroyed on its own, which allows quick scaling and sensible resource allocation;

- Efficient Resource Use: Containers share the host operating system’s kernel instead of each running a full operating system. This keeps overhead low and lets multiple containers run on a single host;

- Built-in Scalability: Containers are easy to replicate, which makes them well suited to modern applications with fluctuating load.
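
The independent lifecycle described above can be sketched as three Docker CLI invocations. The snippet below only builds and prints the commands; it does not execute them. The container name `web` and the image `nginx:1.27` are assumptions for illustration, and `lifecycle_commands` is a hypothetical helper.

```python
import shlex


def lifecycle_commands(name: str, image: str) -> list[list[str]]:
    """Return docker CLI argument lists for one container's life (hypothetical helper)."""
    return [
        ["docker", "run", "--detach", "--name", name, image],  # create and start in the background
        ["docker", "stop", name],                               # stop the running container
        ["docker", "rm", name],                                 # remove the stopped container
    ]


# Assumed name and image; printed as shell-ready command lines.
commands = lifecycle_commands("web", "nginx:1.27")
for argv in commands:
    print(shlex.join(argv))
```

Each command targets a single container by name, so one container can be replaced without touching the others on the same host.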

Unlocking the Power of Docker Integration with DevOps Tools

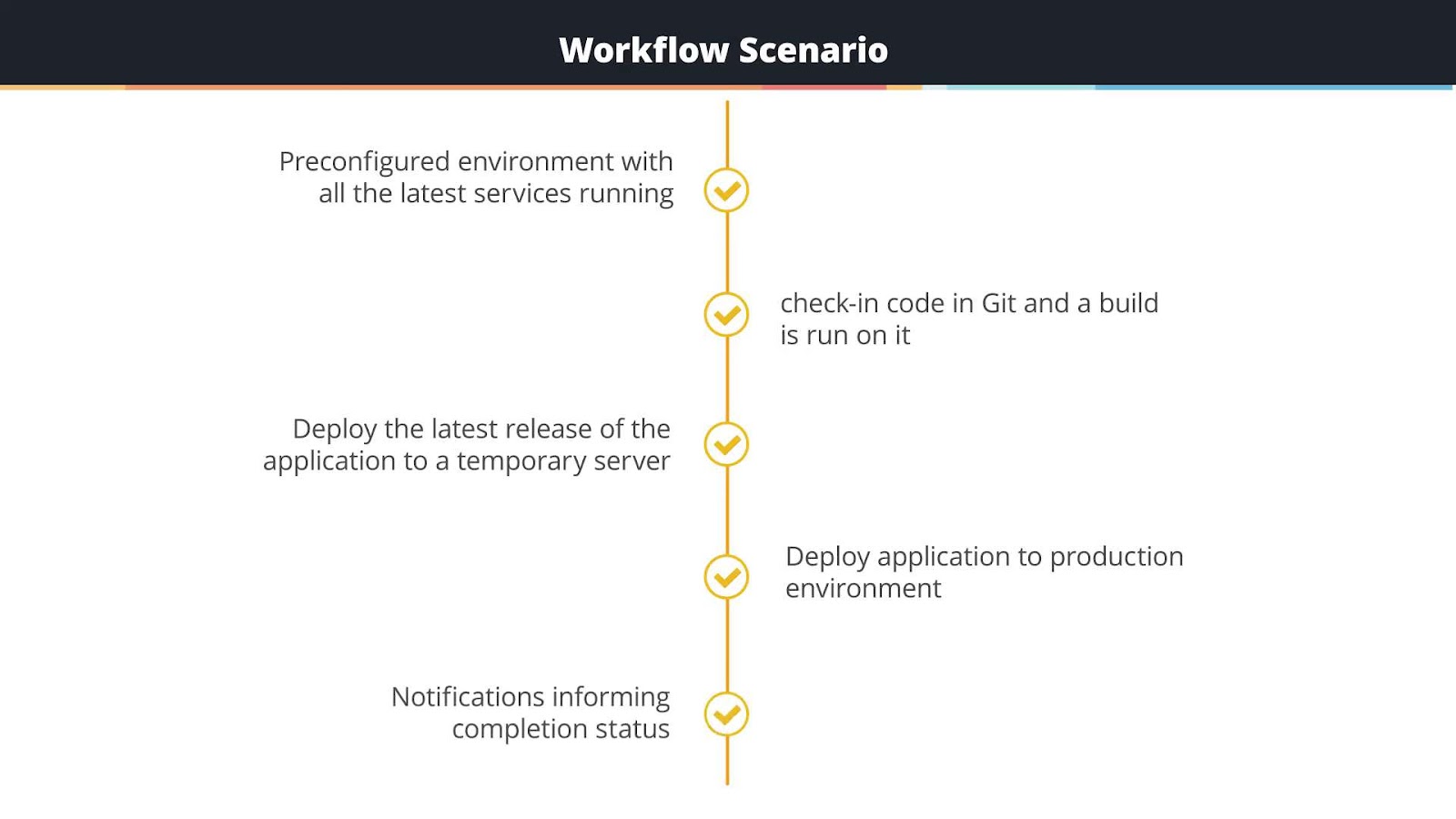

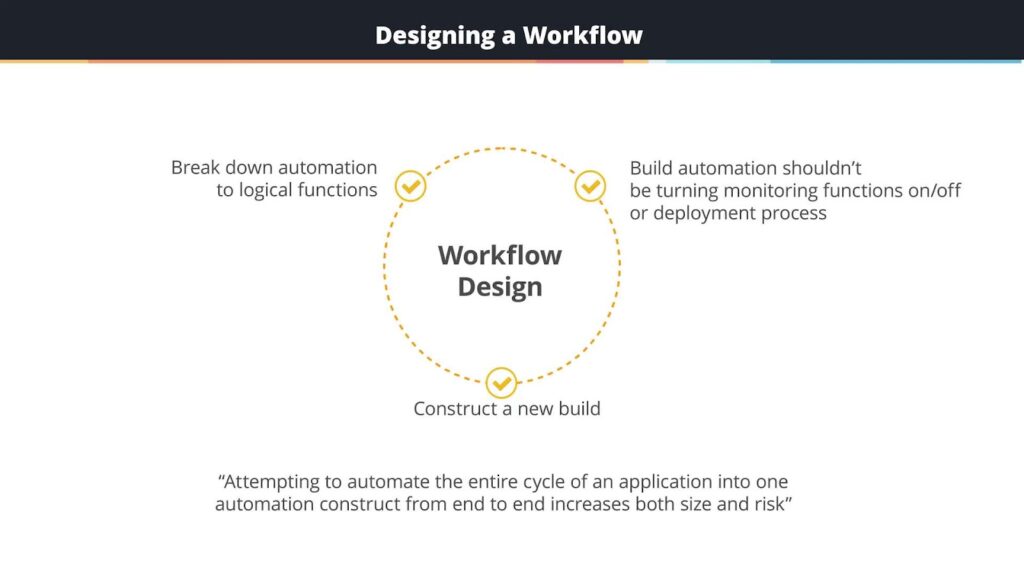

Docker integrates with a wide range of established DevOps tools, which opens up many ways to streamline development workflows. One notable integration is with Jenkins, a long-established automation server. Together they support Continuous Integration/Continuous Deployment (CI/CD) pipelines that automate building, testing, and deploying applications. The sections below look at how Docker supports each half of that pipeline.

Continuous Integration: The Accelerator for Development

Continuous Integration (CI) is central to modern software development, and Docker makes it more effective. Here is how Docker contributes:

- Isolated Testing Environments: Docker lets developers easily create isolated, uniform testing environments. Tests run inside these containers closely mirror the production environment, which makes it easier to find and fix problems early in the development cycle;

- Accelerated Feedback Loop: Docker shortens the feedback loop. Developers receive prompt feedback on their code and can make adjustments quickly, which improves both code quality and development efficiency.
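
As a rough sketch of what a CI job might run, the snippet below assembles the build, test, and push steps for one commit. It only constructs the commands. The registry `registry.example.com/shop/api`, the commit id `3f2a91c`, the test command `pytest -q`, and the helper `ci_commands` are all assumptions for illustration.

```python
import shlex


def ci_commands(repository: str, commit: str,
                test_command: list[str]) -> list[list[str]]:
    """Return the docker CLI steps for one CI run (hypothetical helper)."""
    image = f"{repository}:{commit}"  # tag the image with the commit it was built from
    return [
        ["docker", "build", "--tag", image, "."],         # build from the Dockerfile in the current directory
        ["docker", "run", "--rm", image, *test_command],  # run the tests in a throwaway container
        ["docker", "push", image],                         # publish the tested image
    ]


# Assumed repository, commit id, and test command.
steps = ci_commands("registry.example.com/shop/api", "3f2a91c", ["pytest", "-q"])
for argv in steps:
    print(shlex.join(argv))
```

A CI server such as Jenkins would typically run these steps in order and stop at the first failure, so an image is pushed only after its tests pass.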

Continuous Deployment: Ensuring Smooth Sailing

Continuous Deployment (CD) is all about delivering software reliably and swiftly. Docker lends a helping hand in this regard by ensuring that applications are deployed seamlessly and with minimal hiccups:

- Reliability through Containerization: Docker packages applications in containers that match the environment they were tested in. This reduces deployment errors and shortens periods of system unavailability.

Strategies for Effective Dockerization

Now, let’s explore some strategies that can make your Dockerization process more effective:

1. Efficient Image Building: Crafting Docker Images with Finesse

- Layer Optimization: Keep the number of layers in a Docker image small. Fewer, leaner layers generally mean quicker builds and smaller images;

- Cache Utilization: Using the build cache well can dramatically cut build times, and with them deployment times. Don’t rebuild what you don’t have to!
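
The effect of instruction order on caching can be illustrated with a deliberately simplified model: once one instruction's inputs change, that instruction and every later one must be re-run. This is a sketch of the idea, not Docker's actual cache implementation, and the file names and the helper `rebuilt_steps` are assumptions.

```python
def rebuilt_steps(steps: list[tuple[str, set[str]]],
                  changed_files: set[str]) -> list[str]:
    """Return the instructions that must be re-run in a simplified cache model.

    Each step is (instruction, files it reads from the build context).
    The first step touched by a change invalidates itself and all later steps.
    """
    for index, (_, inputs) in enumerate(steps):
        if inputs & changed_files:
            return [instruction for instruction, _ in steps[index:]]
    return []  # nothing changed, everything comes from cache


ALL_SOURCES = {"requirements.txt", "app.py"}  # assumed project files

# Copying everything first means any source edit also re-runs the install step.
naive = [
    ("FROM python:3.12-slim", set()),
    ("COPY . .", ALL_SOURCES),
    ("RUN pip install -r requirements.txt", set()),
]

# Copying only the dependency list first keeps the install step cached.
cache_friendly = [
    ("FROM python:3.12-slim", set()),
    ("COPY requirements.txt .", {"requirements.txt"}),
    ("RUN pip install -r requirements.txt", set()),
    ("COPY . .", ALL_SOURCES),
]

changed = {"app.py"}  # a typical code-only change
naive_rebuild = rebuilt_steps(naive, changed)
friendly_rebuild = rebuilt_steps(cache_friendly, changed)
print(naive_rebuild)
print(friendly_rebuild)
```

In this model, a code-only change re-runs the dependency installation in the first ordering but only the final copy in the second, which is why dependency manifests are usually copied before the rest of the source.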

2. Managing Volumes: Taming Data for Stateful Applications

- Data Persistence: Docker volumes come to the rescue for stateful applications. They keep data outside the container’s writable layer, so it persists when a container is restarted or replaced and critical information isn’t lost;

- Data Sharing: Volumes also facilitate data sharing among containers, promoting efficient data management in complex application architectures.
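
As a small sketch of data persistence, the snippet below builds a `docker run` command that attaches a named volume to a container. The container name `cache`, the volume name `cache-data`, the image `redis:7`, and the helper `run_with_volume` are assumptions for illustration; the command is printed, not executed.

```python
import shlex


def run_with_volume(name: str, image: str, volume: str, target: str) -> list[str]:
    """Return a docker run command that mounts a named volume (hypothetical helper)."""
    # --mount attaches the named volume at the given path inside the container.
    mount = f"type=volume,source={volume},target={target}"
    return ["docker", "run", "--detach", "--name", name, "--mount", mount, image]


# Assumed names; /data is where this example expects the application to write.
command = run_with_volume("cache", "redis:7", "cache-data", "/data")
print(shlex.join(command))
```

Because the volume is named, a replacement container started with the same `--mount` option sees the data written by its predecessor, and a second container can mount the same volume to share it.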

3. Networking Considerations: Bridging the Container Divide

- Effective Network Configuration: Properly configuring network settings within Docker is crucial for secure and efficient communication between containers and external systems;

- Microservices Harmony: In a microservices architecture, network configuration becomes even more critical, because containers must communicate reliably with one another to deliver the desired functionality.
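
One common arrangement is to place cooperating containers on a shared user-defined network. The sketch below builds the corresponding commands without running them. The network name `app-net`, the service names, the `example/...` images, and the helper `network_commands` are hypothetical.

```python
import shlex


def network_commands(network: str, services: dict[str, str]) -> list[list[str]]:
    """Return docker CLI commands that put services on one network (hypothetical helper)."""
    commands = [["docker", "network", "create", network]]  # create the user-defined network
    for name, image in services.items():
        # Attach each container to the shared network when it starts.
        commands.append(
            ["docker", "run", "--detach", "--name", name, "--network", network, image]
        )
    return commands


# Assumed service names and images.
commands = network_commands(
    "app-net",
    {"api": "example/api:1.0", "worker": "example/worker:1.0"},
)
for argv in commands:
    print(shlex.join(argv))
```

On a user-defined network, containers can reach one another by container name, so in this sketch `worker` could address the other service simply as `api`.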

Strengthening Security in DevOps Workflows through Dockerization

Strong security in Dockerized DevOps workflows is essential for protecting sensitive information and preserving the integrity and reliability of applications. Key measures include regularly updating images, using signed images, restricting access, and scanning for vulnerabilities. Together, these measures help prevent security breaches and unauthorized data access, and they keep the DevOps environment dependable.
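
The access-limiting part of this advice can be sketched as a `docker run` command with reduced privileges. It does not cover image signing or vulnerability scanning. The image name, the user id `1000:1000`, and the helper `hardened_run` are assumptions for illustration, and the right settings depend on the application.

```python
import shlex


def hardened_run(image: str, user: str = "1000:1000") -> list[str]:
    """Return a docker run command with reduced privileges (hypothetical helper)."""
    return [
        "docker", "run", "--rm",
        "--read-only",        # mount the container's root filesystem read-only
        "--cap-drop", "ALL",  # drop all Linux capabilities
        "--user", user,       # run as a non-root user and group id
        image,
    ]


# Assumed image name; printed rather than executed.
command = hardened_run("example/api:1.0")
print(shlex.join(command))
```

Some applications need a writable directory or a specific capability, in which case those exceptions can be granted individually rather than running with the defaults.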

Key Benefits in Practice

1. Unparalleled Scalability

Using Docker in DevOps processes helps enterprises scale to handle increased load. Additional containers can be deployed quickly as demand fluctuates. Scaling rapidly and efficiently lets organizations maintain service continuity and stable performance as user bases grow and workloads vary.
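
A back-of-the-envelope version of this scaling decision is shown below. It assumes each container handles a fixed number of requests per second, which is a simplification. The function `replicas_needed` and the numbers used are illustrative only.

```python
import math


def replicas_needed(requests_per_second: float,
                    capacity_per_container: float,
                    minimum: int = 1) -> int:
    """Return how many containers are needed for a given load (simplified model)."""
    if capacity_per_container <= 0:
        raise ValueError("capacity_per_container must be positive")
    # Round up so the load never exceeds total capacity; keep at least `minimum` running.
    return max(minimum, math.ceil(requests_per_second / capacity_per_container))


# Assumed figures: each container serves 100 requests per second.
quiet = replicas_needed(0, 100)
normal = replicas_needed(450, 100)
peak = replicas_needed(1200, 100)
print(quiet, normal, peak)
```

With these assumed figures the result moves from 1 container when idle to 5 under normal load and 12 at peak, and back down again as demand falls.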

2. Enhanced Flexibility and Superior Portability

Because Docker packages an application with its environment, developers can work across varied environments with fewer constraints. Applications can be moved between cloud platforms and through the development, testing, and production stages with little friction. This portability helps applications keep consistent performance and functionality across platforms, reduces the likelihood of compatibility issues, and makes development cycles smoother.

3. Optimal Cost Efficiency

Docker helps organizations use their resources more efficiently, which reduces the need for additional infrastructure investment. It does this by making better use of system resources, cutting the overhead of maintaining multiple environments, and allowing computing resources to be allocated more precisely. The resulting savings lower operational costs and free up resources for more critical areas such as innovation.

Further Insights and Recommendations

Organizations running Dockerized DevOps workflows should continuously monitor and refine their security posture and operational strategies. Regular security assessments and awareness of current threats are essential to keeping a containerized environment resilient and secure.

In addition, tools that integrate well with Docker can further improve the scalability, flexibility, portability, and cost-efficiency of DevOps workflows. This helps organizations stay competitive, adapt to changing market conditions, and keep customers satisfied through continuous delivery of high-quality software.

Organizations are encouraged to explore diverse Docker configurations and deployment strategies to identify the most effective and efficient solutions tailored to their unique operational needs and objectives. By doing so, they can optimize their DevOps workflows and ensure long-term sustainability and success in an increasingly digital and dynamic business landscape.

Conclusion

Adopting Docker in DevOps processes marks a significant change in how software is developed, delivered, and deployed. It addresses many of the obstacles that development and operations teams face, including inconsistent environments, intricate dependencies, and complex resource allocation.

By incorporating Docker, enterprises can refine their DevOps workflows and gain agility, scalability, and efficiency, all of which are essential in a rapidly evolving technology landscape.